http://arxiv.org/abs/0908.2644

I was looking for more information on how the Hamiltonian / Lagrangian formalisms apply to machine learning (I read a paper on natural language processing earlier that used a Lagrangian similar to those of modern QFT; will post it later) and found this.

A given set of data-points in some feature space may be associated with a Schrodinger equation whose potential is determined by the data. This is known to lead to good clustering solutions. Here we extend this approach into a full-fledged dynamical scheme using a time-dependent Schrodinger equation. Moreover, we approximate this Hamiltonian formalism by a truncated calculation within a set of Gaussian wave functions (coherent states) centered around the original points. This allows for analytic evaluation of the time evolution of all such states, opening up the possibility of exploration of relationships among data-points through observation of varying dynamical-distances among points and convergence of points into clusters. This formalism may be further supplemented by preprocessing, such as dimensional reduction through singular value decomposition or feature filtering.

The graphs below show the solution of a clustering problem, i.e. separating data points into groups based on how similar they are to one another.

In this solution, they define a potential function using the entire dataset as input (it's a simple relation given in a short equation early in the paper). Then they define a Hamiltonian as in the Schrodinger equation, and when you combine that with the potential you get this effect where different clusters 'interfere' with each other just like waves!
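To make the "potential from the dataset" idea concrete, here is a minimal sketch. It assumes the quantum-clustering form I recall from Horn and Gottlieb's earlier work (psi is a sum of Gaussians on the data points, and V follows from requiring psi to be an eigenstate), with the constant energy offset left out; the function name, the toy data and the value of sigma are made up for illustration and are not taken from the paper.

```python
import numpy as np

def parzen_and_potential(points, query, sigma):
    """Return (psi, V) evaluated at each query location.

    Assumed form (not copied from the paper):
      psi(x) = sum_i exp(-|x - x_i|^2 / (2 sigma^2))
      V(x)   = -d/2 + sum_i |x - x_i|^2 exp(...) / (2 sigma^2 psi(x))
    The constant energy offset E is omitted.
    """
    points = np.asarray(points, dtype=float)
    query = np.asarray(query, dtype=float)
    d = points.shape[1]
    # Squared distance between every query location and every data point.
    sq = ((query[:, None, :] - points[None, :, :]) ** 2).sum(axis=2)
    g = np.exp(-sq / (2.0 * sigma**2))
    psi = g.sum(axis=1)
    V = -d / 2.0 + (sq * g).sum(axis=1) / (2.0 * sigma**2 * psi)
    return psi, V

# Made-up toy data: two tight groups of five points each.
offsets = np.array([[0.0, 0.0], [0.2, 0.0], [-0.2, 0.0], [0.0, 0.2], [0.0, -0.2]])
points = np.vstack([offsets, offsets + np.array([4.0, 0.0])])

# Evaluate at one cluster centre and at the midpoint between the groups.
query = np.array([[0.0, 0.0], [2.0, 0.0]])
psi, V = parzen_and_potential(points, query, sigma=0.5)
print("psi:", psi)
print("V:  ", V)
```

With this toy data, psi is large and V is low at the cluster centre, while V is much higher at the midpoint between the two groups, which is the sense in which clusters sit in the minima of V.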

The simpler and more commonly used Gaussian clustering is best analogised with the probabilistic heat equation, which cannot account for the interference effects that the Schrodinger equation supplies. On that argument, this DQC (Dynamic Quantum Clustering) technique should be much stronger than Gaussian kernel clustering.

It also appears that more complex Lagrangians than the Schrodinger formulation are relevant to clustering research, QFT / QCD Lagrangians specifically.

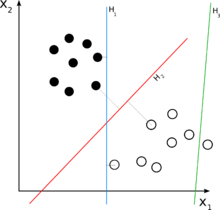

For those unfamiliar with Gaussian kernel clustering: this diagram represents the easiest possible cluster classification problem. Using the distance of each point from the origin, I can separate the classes easily with a line. Forget about the equations behind it; it is visually obvious that it works.

But what if no line separates them without leaving some points on the wrong side? Then you need a curvy function to separate them smoothly.

You can see that the first line is all curvy. It can be approximated by a sum of Gaussians based on the x,y locations of the points. Forget about the equations and look at it visually: there is an obvious relationship between the x,y locations of all those points and the curviness of the line. The line tries to stay close to all the points.
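As a rough illustration of a curvy boundary built from a sum of Gaussians, the sketch below puts one Gaussian on every labelled point, signed by its class, and reads the boundary off as the place where the sum changes sign. This is only a toy stand-in (there are no learned weights, so it is not a trained kernel classifier), and the data, the function name and sigma are all invented for the example.

```python
import numpy as np

def gaussian_score(train_x, train_y, query, sigma=0.5):
    """Signed sum of Gaussians centred on the training points.

    Positive near class +1 points, negative near class -1 points;
    the curvy boundary is where the score crosses zero.
    """
    sq = ((query[:, None, :] - train_x[None, :, :]) ** 2).sum(axis=2)
    return (train_y[None, :] * np.exp(-sq / (2.0 * sigma**2))).sum(axis=1)

# Made-up data: class +1 on a small circle, class -1 on a larger circle,
# so no straight line in the x,y plane separates them.
angles = np.linspace(0.0, 2.0 * np.pi, 12, endpoint=False)
inner = 1.0 * np.c_[np.cos(angles), np.sin(angles)]
outer = 3.0 * np.c_[np.cos(angles), np.sin(angles)]
train_x = np.vstack([inner, outer])
train_y = np.r_[np.ones(12), -np.ones(12)]

# One query near the inner ring, one near the outer ring.
query = np.array([[0.0, 0.9], [0.0, 3.1]])
scores = gaussian_score(train_x, train_y, query)
print(scores)
```

The first query gets a positive score and the second a negative one, so the zero-crossing of the score is a closed curve between the two rings that follows the locations of the points.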

The second picture is the result of taking that Gaussian function and applying a transformation to the vector space it resides in such that the curve turns into a straight line. This distorts the original x,y space into a new, transformed space where the separation is linear!

So the difference between the classical heat equation and the Schrodinger equation is analogous to the difference between the Gaussian sorting technique and this new Schrodinger sorting formulation.

You'll notice the Gaussian technique would probably not work as well when there are no clear boundaries where a line can be drawn. If some points are on the wrong side of the line, there would be problems in sorting.

So what the Schrodinger approach does to improve on this quandary is account for interference, in the same way that the Schrodinger equation accounts for interference where the heat equation cannot. Basically, quantization replaces the classical momentum p = mv with the operator -iħ∇ (motivated by de Broglie's relation between momentum and wavelength). The diffusion coefficient thereby picks up an imaginary character, and hence interference effects not seen in the classical heat equation (with its Gaussian solutions).
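To make the imaginary diffusion coefficient explicit, here is the standard side-by-side comparison for the free case (no potential). The symbols D, ħ and m are the usual textbook ones and are my choice, not the paper's notation.

```latex
\begin{aligned}
\text{heat / diffusion:}\quad
  & \frac{\partial u}{\partial t} = D\,\nabla^2 u \\
\text{free Schrodinger:}\quad
  & i\hbar\,\frac{\partial \psi}{\partial t} = -\frac{\hbar^2}{2m}\,\nabla^2 \psi
  \;\;\Longrightarrow\;\;
  \frac{\partial \psi}{\partial t} = \frac{i\hbar}{2m}\,\nabla^2 \psi
\end{aligned}
```

Comparing the two right-hand sides, the Schrodinger equation has the same shape as the diffusion equation with D replaced by iħ/2m, which is why its solutions oscillate and interfere instead of simply smoothing out.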

So at the beginning of the paper the diffusion equation is described as being analogous to finding the Gaussian kernel (that squiggly line), because you're basically just looking for the nearest points: imagine that if they 'diffused outward', they would diffuse along that squiggly line. As a visual analogy, waves of heat diffusing from each of the points would give you a common wavefront equal to that squiggly Gaussian line.

So we know that diffusion waves and Schrodinger probability amplitude waves behave wildly differently. One is a probability, the other is a probability amplitude (since i is involved in the Schrodinger DE). Probability amplitudes exhibit self-interference and all that spooky business, and it turns out that this really helps with clustering problems, which is pretty strange.
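The difference between adding probabilities and adding probability amplitudes can be seen in a few lines. This is a generic illustration, not anything from the paper: two Gaussian wave packets moving toward each other, with a made-up wavenumber k, combined once as probabilities and once as complex amplitudes.

```python
import numpy as np

x = np.linspace(-5.0, 5.0, 201)
k = 2.0  # hypothetical wavenumber, chosen only to make fringes visible

# Two Gaussian packets with opposite phase gradients (moving toward each other).
a = np.exp(-(x + 1.0) ** 2 / 2.0) * np.exp(1j * k * x)
b = np.exp(-(x - 1.0) ** 2 / 2.0) * np.exp(-1j * k * x)

# Heat-equation style: probabilities simply add, so the result is never
# smaller than either contribution.
prob_sum = np.abs(a) ** 2 + np.abs(b) ** 2

# Schrodinger style: amplitudes add first, then we take the squared modulus.
amp_sum = np.abs(a + b) ** 2

# The difference is the interference (cross) term 2 * Re(a * conj(b)).
cross = amp_sum - prob_sum
print("max enhancement:", cross.max())
print("max suppression:", cross.min())
```

The cross term is positive in some places and negative in others, so amplitudes can cancel where probabilities could only pile up. That cancellation is the extra ingredient the heat-equation picture lacks.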

In fact, it frequently turns out that a concentration of data-points will lead to a local minimum in V, even if ψ does not display a local maximum. Thus, by replacing the problem of finding maxima of the Parzen estimator by the problem of locating the minima of the associated potential, $V(\vec{x})$, we simplify the process of identifying clusters. The effectiveness of this approach has been demonstrated in the work by Horn and Gottlieb [5, 13]. It should be noted that the enhancement of features obtained by applying Eq. 4 comes from the interplay of two effects: attraction of the wave-function to the minima of V and spreading of the wave-function due to the second derivative (kinetic term). This may be viewed as an alternative model to the conventional probabilistic approach, incorporating attraction to cluster-centers and creation of noise, both inferred from - or realized by - the given experimental data.

DQC drops the probabilistic interpretation of ψ and replaces it by that of a probability-amplitude, as customary in Quantum Mechanics. DQC is set up to associate data-points with cluster centers in a natural fashion. Whereas in QC this association was done by finding their loci on the slopes of V, here we follow the quantum-mechanical temporal evolvement of states associated with these points. Specifically, we will view each data-point as the expectation value of the position operator in a Gaussian wave-function $\psi_i(\vec{x}) = e^{-(\vec{x}-\vec{x}_i)^2 / 2\sigma^2}$; the temporal development of this state traces the association of the data-point it represents with the minima of $V(\vec{x})$ and thus, with the other data-points.

So instead of just diffusing outward from the points to find the separation boundary, we 'imaginarily diffuse' outward like a probability amplitude, the way an electron does. That imaginary diffusion leads to interference and some kinds of noise effects. Basically, instead of the points of the cluster adding up to build the squiggly line with probability, they do it with probability amplitude. This makes certain clusters 'push' other clusters away, instead of just adding up the clusters and finding their closest Gaussian divider.
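Here is a small 1-D sketch of the "follow the time evolution of the state attached to a data point" idea. It is not the paper's method: the paper works in a truncated basis of Gaussians centred on the data points, whereas this toy version puts the Hamiltonian on a plain grid with finite differences. The Hamiltonian form H = -(sigma^2/2) d^2/dx^2 + V, the potential formula, the data and all names are assumptions made for illustration.

```python
import numpy as np

sigma = 0.5
# Made-up 1-D data: one group around 0 and one around 4.
data = np.array([-0.3, -0.1, 0.0, 0.1, 0.3, 3.7, 3.9, 4.0, 4.1, 4.3])

x = np.linspace(-3.0, 7.0, 201)
dx = x[1] - x[0]

# Potential built from the data (assumed quantum-clustering form, d = 1,
# constant energy offset omitted).
sq = (x[:, None] - data[None, :]) ** 2
g = np.exp(-sq / (2.0 * sigma**2))
V = -0.5 + (sq * g).sum(axis=1) / (2.0 * sigma**2 * g.sum(axis=1))

# H = -(sigma^2 / 2) d^2/dx^2 + V, using a three-point finite difference.
off = np.full(x.size - 1, -sigma**2 / (2.0 * dx**2))
H = np.diag(sigma**2 / dx**2 + V) + np.diag(off, 1) + np.diag(off, -1)
evals, evecs = np.linalg.eigh(H)

# Gaussian state centred on the data point at x = 0.3, normalised on the grid.
psi0 = np.exp(-(x - 0.3) ** 2 / (2.0 * sigma**2))
psi0 /= np.sqrt((np.abs(psi0) ** 2).sum())

def evolve(t):
    """Apply exp(-i H t) to psi0 via the eigendecomposition of H."""
    coeffs = evecs.T @ psi0
    return evecs @ (np.exp(-1j * evals * t) * coeffs)

def mean_x(psi):
    """Expectation value of position for a grid wave function."""
    p = np.abs(psi) ** 2
    return (x * p).sum() / p.sum()

m_start = mean_x(evolve(0.0))
m_later = mean_x(evolve(np.pi / 2.0))
print("<x> at t=0:   ", m_start)
print("<x> at t=pi/2:", m_later)
```

In this toy setup the expected position starts at the data point (0.3) and moves toward the bottom of the potential well formed by its group, near x = 0. Tracking how such expected positions move and bunch together over time is the visual idea behind the paper's approach, as far as I understand it.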

Gaussian kernel separation graphs look weak in comparison.

I've been searching arXiv for machine learning AND Lagrangian (and the ML subject category, etc.) and there are a bunch of really strange (read: interesting) papers about similar applications. Apparently investigating the more modern wave equations is an active area of research.

A MATLAB implementation of that Schrodinger clustering algorithm, along with some different and interesting papers, is linked below. There really isn't much to get from the papers other than some examples and the equations for the potential, the wave function, and the Schrodinger equation, but there are lots of pictures.

http://horn.tau.ac.il/QC.htm

Especially the pictures in this one https://docs.google.com/

There was also a Stack Overflow post on this subject (will post the link later), but it appears there are no implementations of any DQC (Schrodinger or beyond) in Python, or really in anything but this simple MATLAB implementation. The equations are simple, though, and the numerical routines they need exist in SciPy, so it could easily be ported and made more user-friendly / better documented, à la FANN and its variants.
